1. Introduction

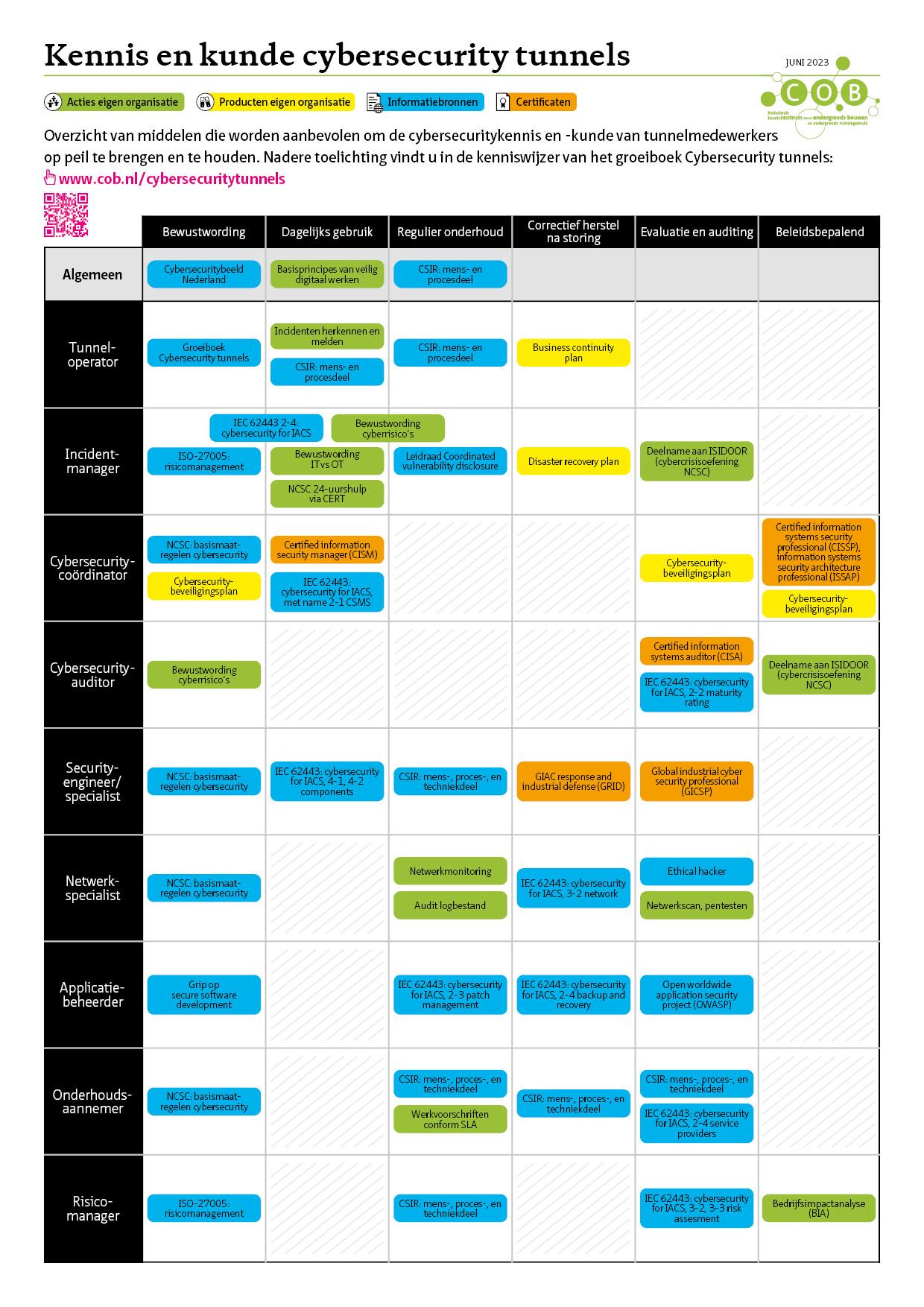

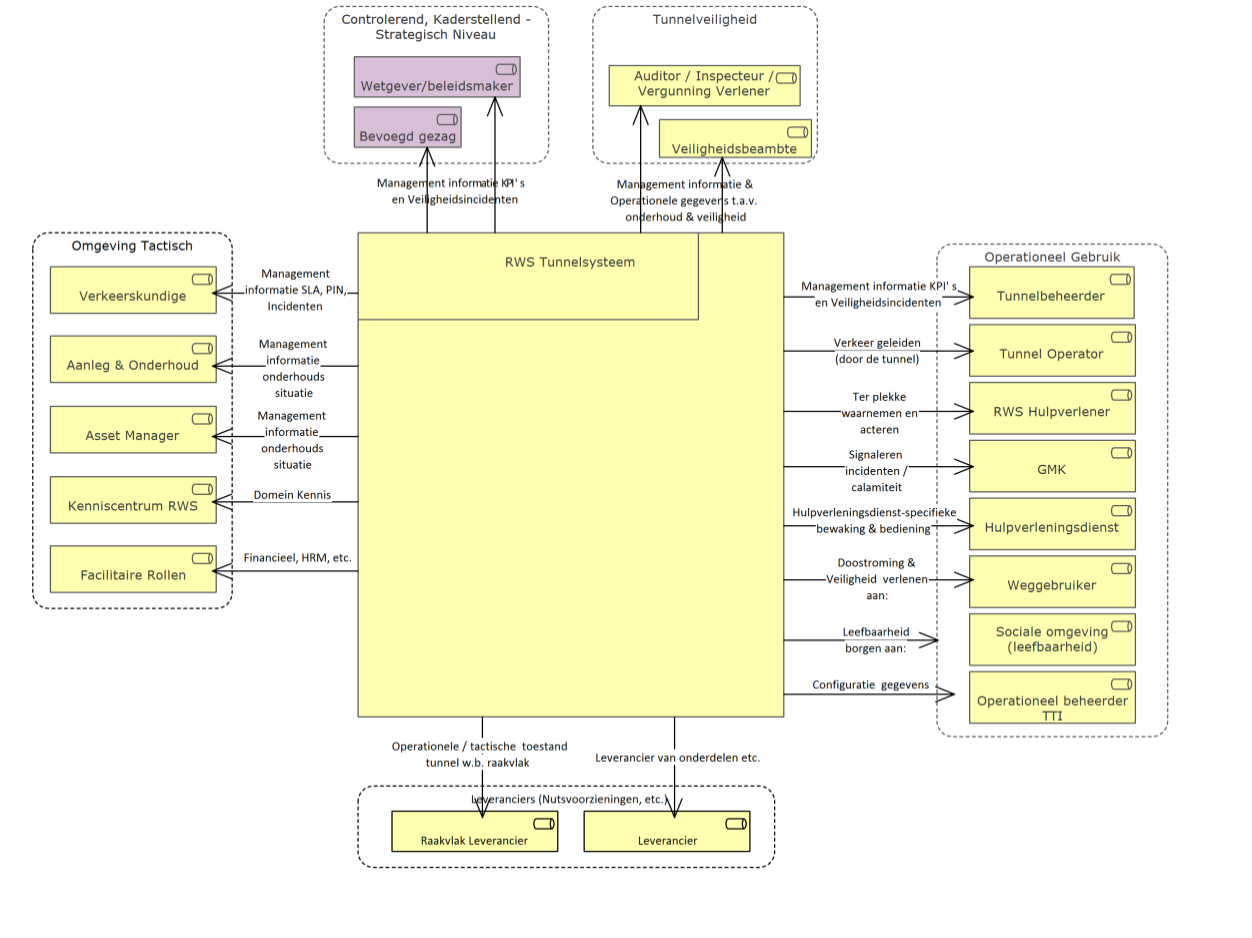

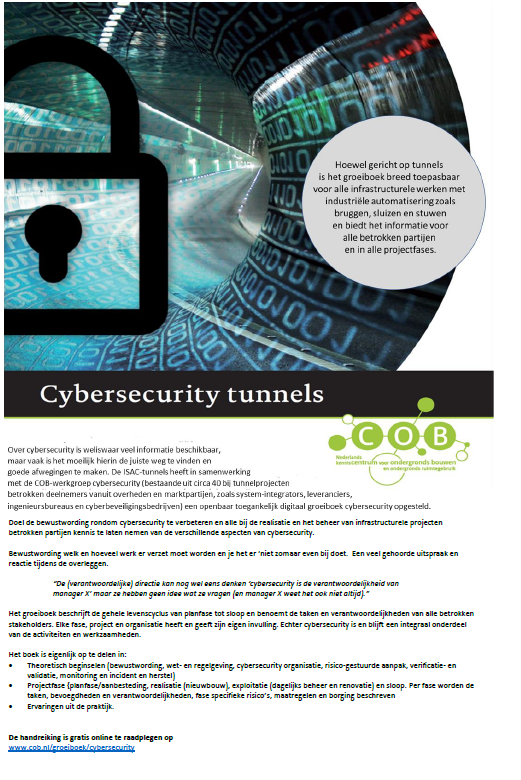

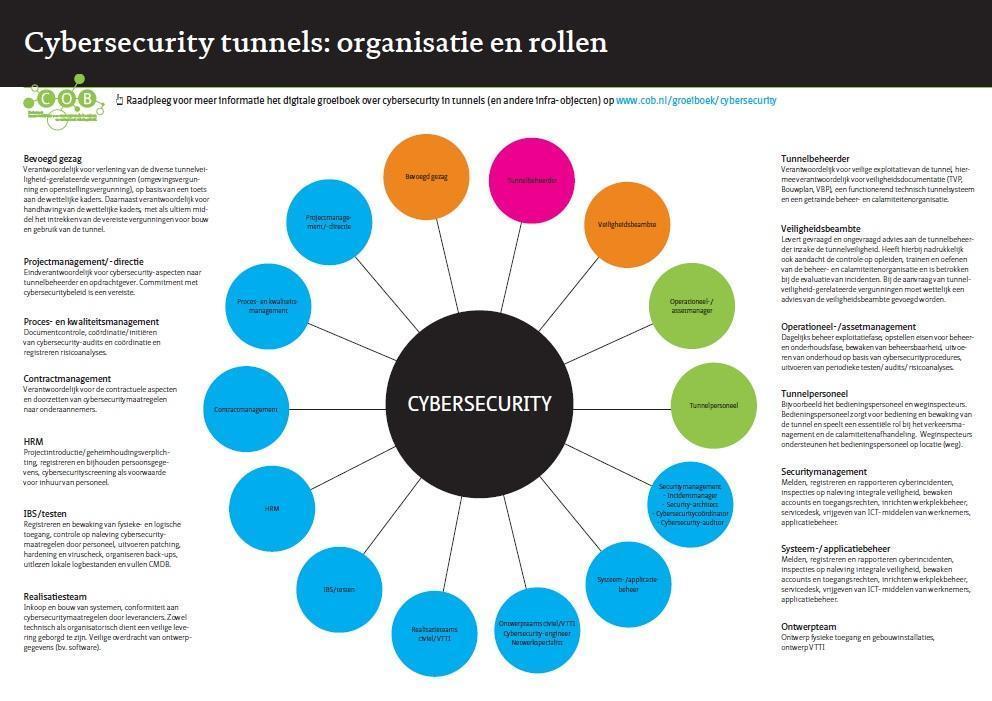

The key points of this living document are summarised in a fact sheet and on the posters ‘Guidance on cybersecurity for (tunnel) managers’ and ‘Cybersecurity and organisation relationship diagram’. These products are in Dutch and can be downloaded free of charge.

>> Fact sheet corresponding to the living document version 1 (pdf, 525 KB)

>> Cybersecurity guide for (tunnel) managers (pdf, 133 KB)

>> Fact sheet corresponding to the living document version 2 (pdf, 555 KB)

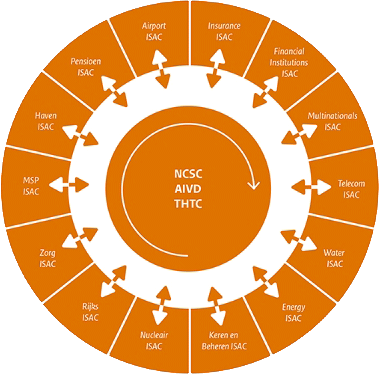

>> Cybersecurity and organisation relationship diagram (pdf, 85 KB)

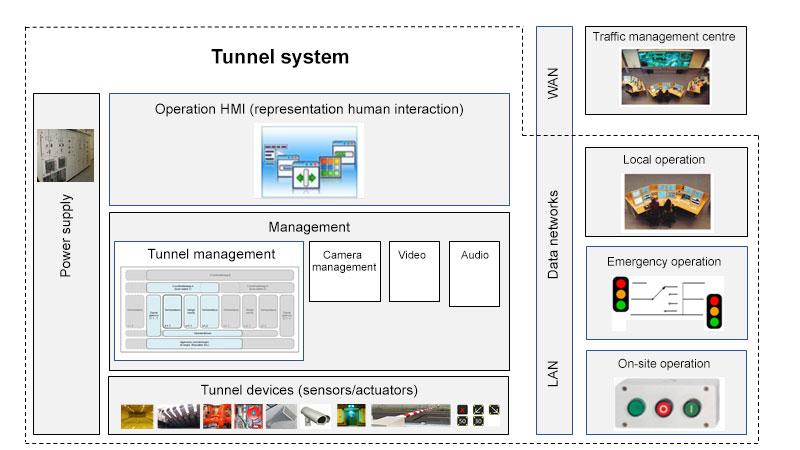

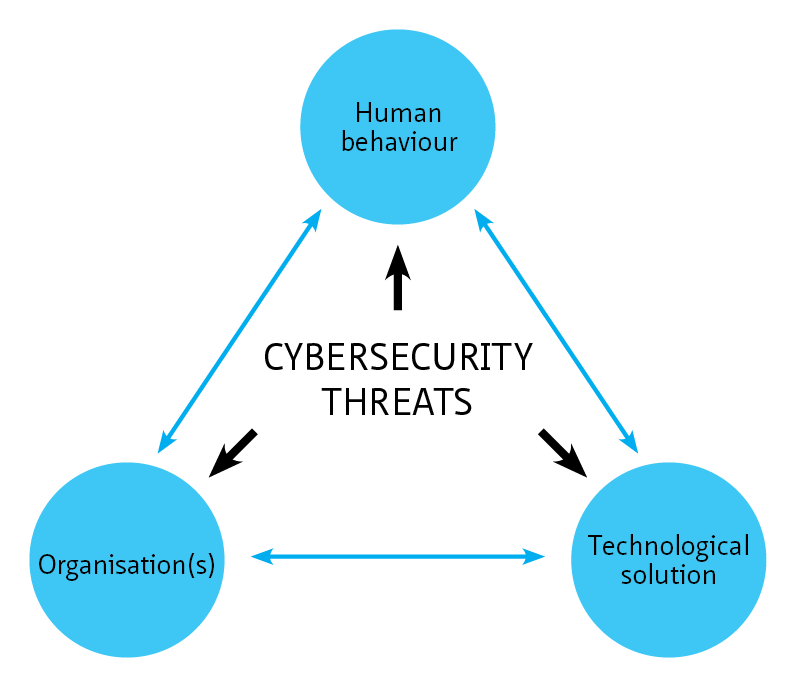

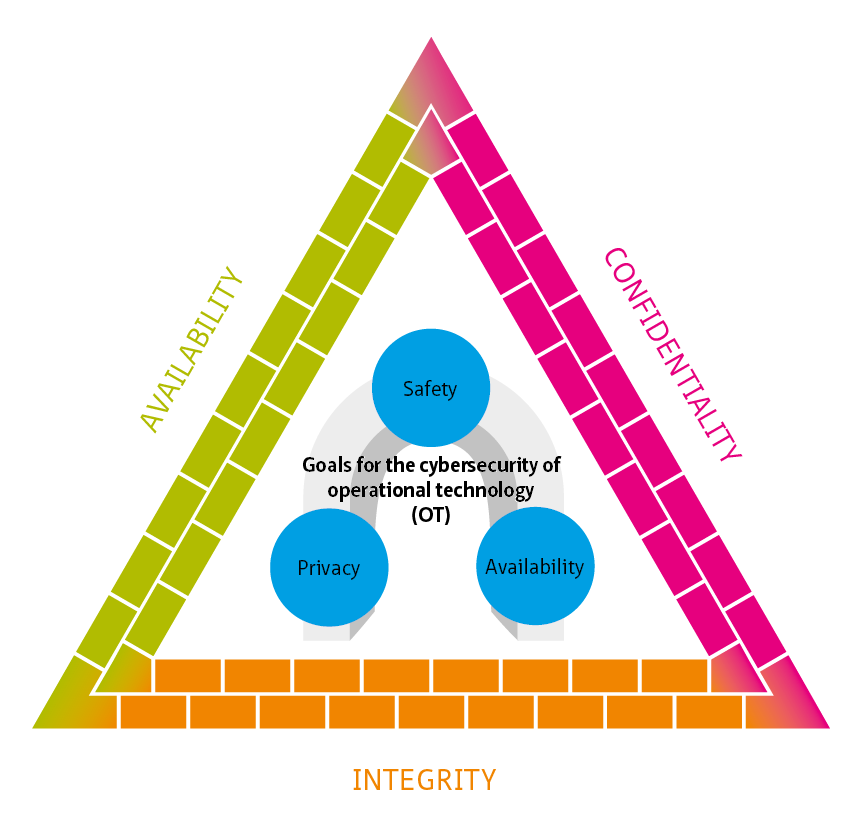

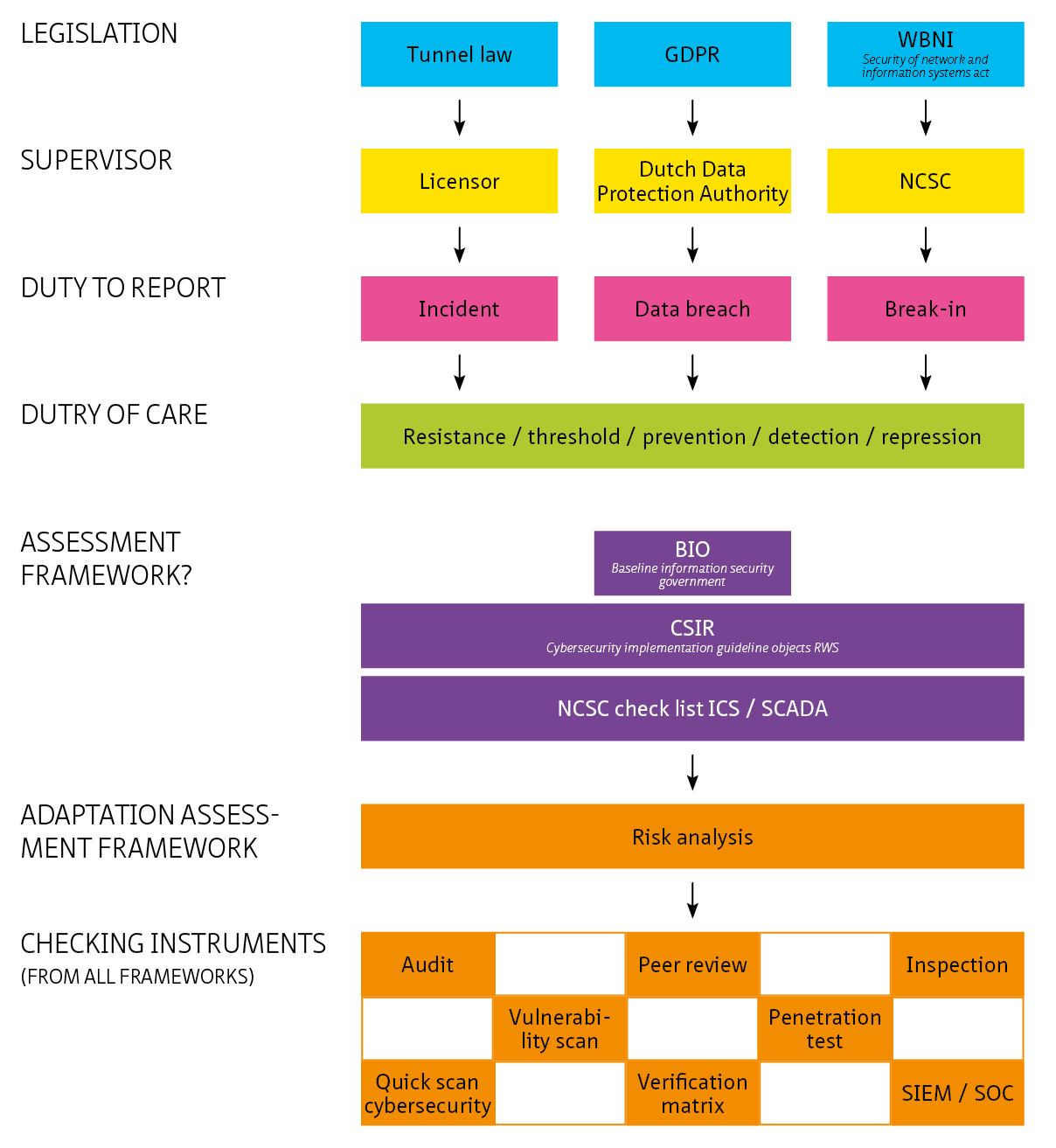

This living document focuses on cybersecurity in the context of safety, availability and privacy in infrastructure. Although the document is aimed specifically at tunnels, its content applies broadly to all infrastructural works and objects with (industrial) automation, such as bridges, locks, weirs and storm surge barriers.

Download pdf-version
